HOW TO: Automate Batch Deletion of SQL Server Records

Using a single DELETE statement to remove 20 million+ records from SQL Server is a bad idea. Here's a better approach.

I wrote yesterday about four different approaches to deleting a large number (one million or more) of records from a single table in SQL Server:

- BAD #1: Deleting From a Linked Table in MS Access

- BAD #2: Using the T-SQL

DELETEStatement to Remove Millions of Rows at Once - GOOD #1: Using the T-SQL

TRUNCATE TABLEstatement - GOOD #2: Using T-SQL

DELETEto remove records in batches

Not everyone was convinced:

I disagree. Breaking up the Delete into multiple batches is not a good solution. Instead of one transaction, as it should be, you then get multiple.

— Philipp Stiefel (@philivc) May 25, 2022

Did you compare performance? I doubt you'll gain much if anything at all by that.

Access MVP Philipp Stiefel challenged my conclusions on Twitter. And for good reason! I never really explained why I prefer deleting records in batches.

Rather than launch into a theoretical defense of my reasoning, let me instead take you along on the journey that prompted my earlier article in the first place.

Logging Gone Wild

One of my clients uses an SIEM (security information and event management) tool called EventSentry.

Among other things, EventSentry writes Windows Events to tables in SQL Server. Unless you take steps to remove these entries, they are preserved indefinitely. This information is very useful in the event of a cyberattack as it can help you piece together what happened after the fact.

The event data loses its value with time. You really don't need to know that Ted from Accounting entered the wrong password when he tried to log on five years ago.

Long story short, the database had millions of records with no remaining value. The four biggest tables each held 20 million+ records.

I'm in the middle of migrating this database to a new instance of SQL Server. As part of that move, I wanted to bring these tables under control using a three-step process:

- Purge old records that no longer provide business value

- Backup and restore the database from the old server to the new one

- Shrink the database to reclaim the free space

- Schedule a regular purge of old data to prevent this from happening again

This article is all about executing Step 1.

Deleting 150 Million Records in One Shot

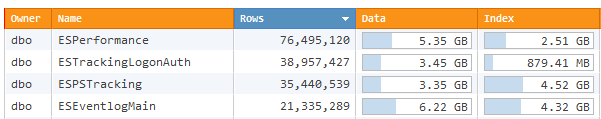

Here's a listing of the four largest tables in the database:

The events in the tables above go back more than ten years.

There's probably no need to keep the data more than about 90 days. I'm conservative by nature, though, so I decided to keep at least two years' worth of data from each table.

My first attempt was "BAD OPTION #2" from above. I tried running the following T-SQL statements from SSMS:

DECLARE @cutoff datetime = '2020-01-01'

DELETE FROM ESEventlogMain WHERE eventtime < @cutoff;

DELETE FROM ESTrackingLogonAuth WHERE recorddate < @cutoff;

DELETE FROM ESPerformance WHERE recorddate < @cutoff;

DELETE FROM ESPSTracking WHERE start_datetime < @cutoff;When I tried executing those massive DELETE statements, the server just hung with no indication of how much progress it had made.

After 20 minutes with no feedback, I ended up canceling the query in SSMS. The cancel operation took an additional 30 minutes to complete.

After nearly an hour of waiting, I was right back where I started–no records deleted and no idea how long it might take for the entire DELETE operation to complete.

NOTE: One thing I did not try that would have helped would be to run the DELETE query for only one table at a time. It's possible that SQL Server was 80% through deleting records from the last table when I canceled the query. It's also possible it was only 10% through the first table. That's the real downside to the massive, single-statement DELETE query approach.

Deleting Records in Batches

I needed a different approach.

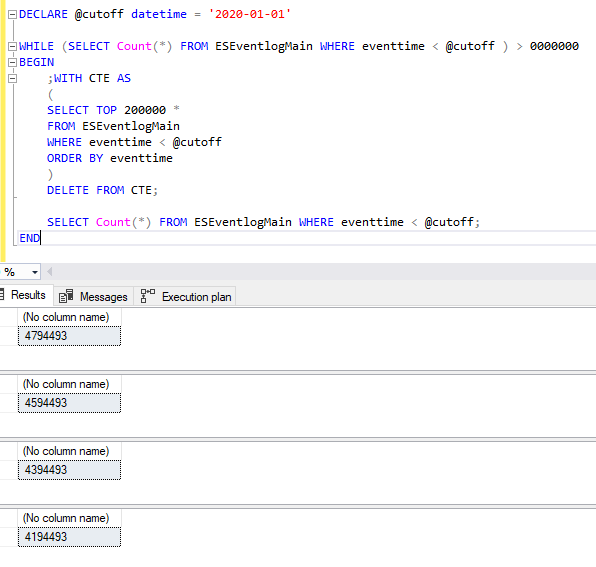

Here's how the below T-SQL works in plain English:

- Set a cutoff date (only records from before this date will be deleted)

- Loop until all records from prior to the cutoff date have been deleted

- Delete the 200K oldest records from the table

- Show the current count of records to help track progress

DECLARE @cutoff datetime = '2020-01-01'

WHILE (SELECT Count(*) FROM ESEventlogMain WHERE eventtime < @cutoff ) > 0000000 -- Initially set to 11000000 while testing

BEGIN

;WITH CTE AS

(

SELECT TOP 200000 *

FROM ESEventlogMain

WHERE eventtime < @cutoff

ORDER BY eventtime

)

DELETE FROM CTE;

SELECT Count(*) FROM ESEventlogMain WHERE eventtime < @cutoff;

ENDAnd this is what it looked like while executing in SSMS:

Stop and Go: Picking Up Where You Left Off

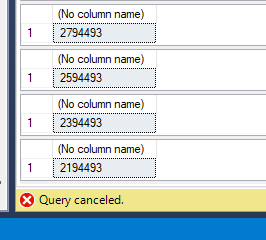

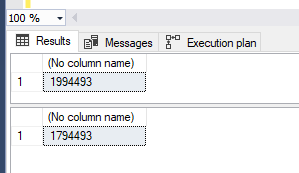

One of the advantages of this method is you can cancel query execution and the cancelation takes less than a minute (versus 30+ minutes the last time). The execution also picks up where it left off.

This image is a screenshot of what happened after I clicked the cancel button in SSMS:

This image is a screenshot shortly after I pressed F5 to re-execute the T-SQL after the earlier cancellation.

Choosing an Appropriate Batch Record Count

The optimal number of records to delete each time through the loop may vary significantly from table to table.

My personal preference is to pick a number of records such that it takes about 30 seconds to execute each DELETE query. I feel this is a good balance between keeping SSMS responsive/allowing for interruptions and quick rollbacks versus negatively impacting performance by running an excessive number of queries. The total amount of time is heavily dependent on the number of indexes in the table, whether the fields we're using to filter and/or sort on are indexed, etc.

I use 100,000 records as a starting point for my initial timing test.

Depending on how long it takes to delete those 100K records, I adjust the record count to meet my 30-second target. This approach is easiest to understand with an example:

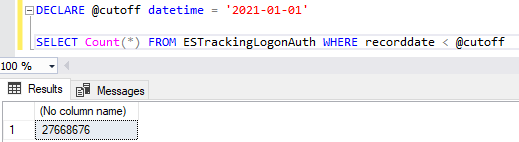

Step 1. Get record count

There are 27,668,676 records to be deleted.

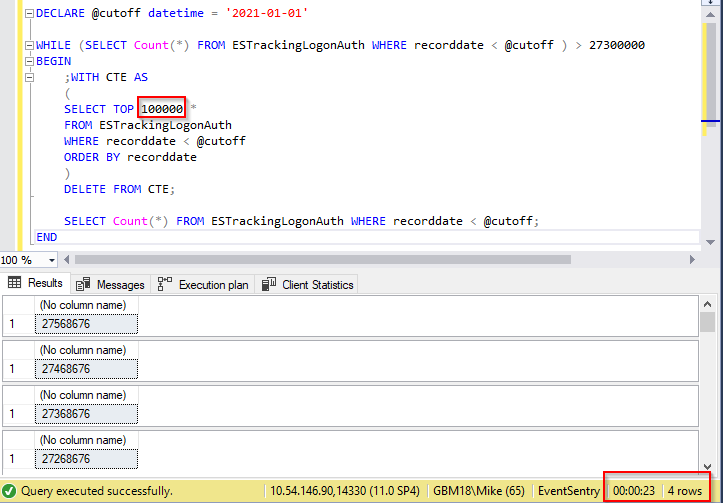

Step 2. Run speed test

It took 23 seconds to delete 400K rows.

We should be able to delete about 500K rows in 30 seconds ( 30 / 23 * 400,000 = 521,739).

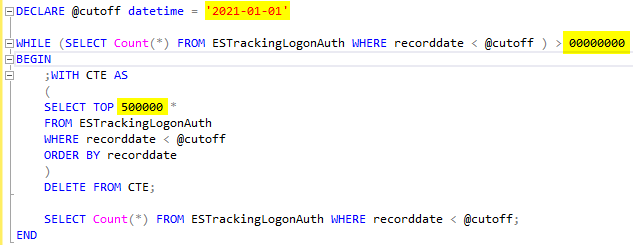

Step 3. DELETE the rows in batches

I'm going to delete:

- Records in 2020 and earlier (< '2021-01-01')

- All records ( WHILE COUNT > 0)

- In batches of 500,000

Customizing the Solution

Obviously, your table and field names will be different than the ones I used in my example above.

You will need to make (at minimum) the following changes:

- Change

ESTrackingLogonAuthto the name of the table you want to DELETE from - Change

recorddateto the name of the field you want to use for filtering - Change

datetimeand'2021-01-01'to match the data type and cutoff value of the field you are using for filtering - You may want to switch the direction of the

ORDER BYstatement (i.e., addDESC), depending on the nature of the filtering field

Referenced articles

Image by Dirk Rabe from Pixabay

UPDATED [2022-05-26]: Fixed broken images at bottom of article (thanks for the heads up, IvenBach!).